The ELK stack consists of three open source products, Elasticsearch, Logstash, and Kibana, that many companies use to centralize their log data. Together they are the most commonly used toolset for log analysis in IT environments. The stack is popular because it fills a real need in the log analytics space: it makes searching and analyzing large data sets much easier and faster as the volume of data grows. ELK is also versatile. You can run the stack as a stand-alone application or integrate it with existing applications to work with the most current data, and Elasticsearch's near real-time search lets you act on that data as it arrives. You can use each of these tools separately or together with other products.

Logstash collects and parses logs, then Elasticsearch indexes and stores the information. Kibana presents the data in visualizations on a web interface that provide actionable insights into your environment. Because all logs can be searched in one place, the ELK stack lets you identify issues that span multiple servers by correlating their logs over a specific time frame. This makes centralized logging very useful when you are trying to find problems with your servers or applications.

System Resources

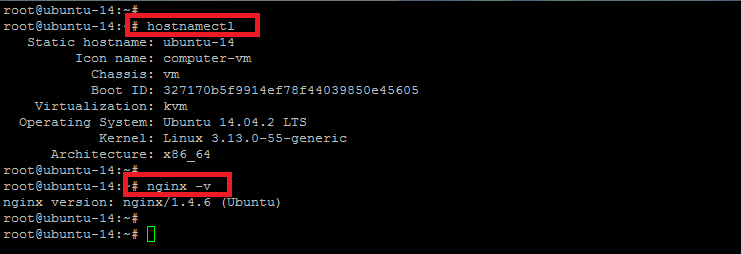

In this article we use a test server running Ubuntu 14.04 with 2 GB RAM, 1 CPU, and 20 GB of free disk space. You can increase these resources depending on the volume of logs in your infrastructure. Log in to your Ubuntu server and create a non-root user with sudo privileges to perform the installation steps.

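First, make sure your package index is up to date by running the following command. It refreshes the list of available packages so that apt installs the latest versions; run ‘sudo apt-get upgrade’ afterwards if you also want to apply pending patches and security updates.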

$ sudo apt-get update

Installing Oracle Java 8

Before installing ELK, we need Java, because both Elasticsearch and Logstash require it to run. We are going to install Oracle Java 8, but you can install OpenJDK instead if you prefer not to use Oracle's build.

To install Oracle Java 8 on Ubuntu 14.04, first add its PPA repository to ‘apt’ and then update the package index once again.

$ sudo add-apt-repository -y ppa:webupd8team/java

$ sudo apt-get update

After the update, install Oracle Java 8 using the following command.

$ sudo apt-get -y install oracle-java8-installer

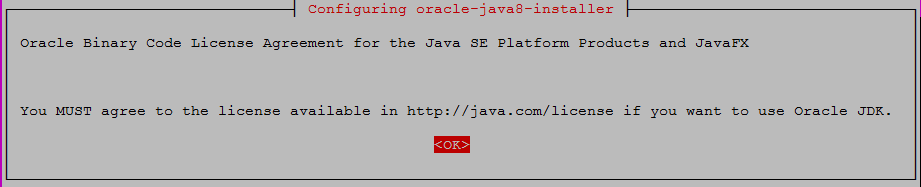

In order to install this package, you must accept the license terms, the Oracle Binary Code License Agreement for the Java SE Platform Products and JavaFX. Not accepting will cancel the installation.

Once the installation is complete, Oracle Java 8 is installed on your system. Verify it by checking the installed Java version with the command below.

$ java -version
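Because Elasticsearch and Logstash will not run on an older Java, you may want to script this check. The following is a minimal bash sketch, not part of the original setup; the function names are hypothetical, and it assumes ‘java -version’ prints its version string in double quotes (as Oracle and OpenJDK builds do). It handles both the legacy ‘1.8.0_66’ style and the newer ‘11.0.2’ style.

```bash
#!/usr/bin/env bash
# Sketch: check that the installed Java is at least a given major version.

# Extract the major version from a Java version string.
# Legacy strings look like "1.8.0_66" (major 8); newer ones like "11.0.2".
java_major_version() {
  local ver="$1" major
  major="${ver%%.*}"              # text before the first dot
  if [ "$major" = "1" ]; then     # legacy "1.x" numbering
    ver="${ver#1.}"
    major="${ver%%.*}"
  fi
  major="${major%%[!0-9]*}"       # drop suffixes such as "-ea"
  printf '%s\n' "$major"
}

# Print the quoted version that `java -version` writes to stderr,
# e.g. java version "1.8.0_66" -> 1.8.0_66 (empty if java is missing).
installed_java_version() {
  java -version 2>&1 | awk -F '"' '/version/ { print $2; exit }'
}

# Return 0 if the installed Java major version is >= $1 (default 8).
require_java() {
  local min="${1:-8}" ver major
  ver="$(installed_java_version)"
  if [ -z "$ver" ]; then
    echo "java not found on PATH" >&2
    return 1
  fi
  major="$(java_major_version "$ver")"
  if [ -z "$major" ] || [ "$major" -lt "$min" ]; then
    echo "Java $ver is older than $min" >&2
    return 1
  fi
  echo "Java $ver OK"
}
```

For example, ‘require_java 8’ prints a confirmation and returns 0 when Java 8 or newer is on the PATH, and returns 1 otherwise.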

Installing Elasticsearch

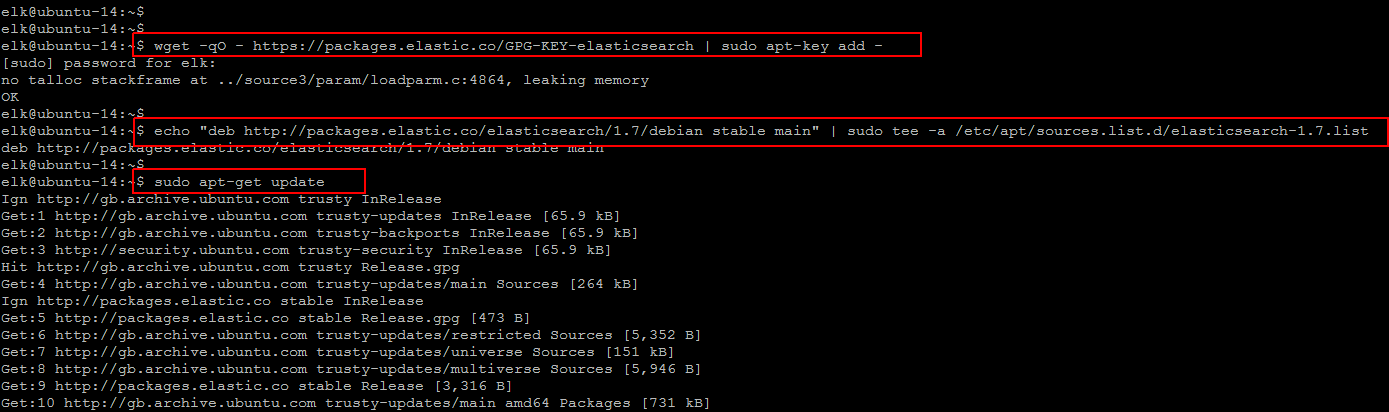

To install Elasticsearch, first import Elastic's public signing key and add the official Elasticsearch repository (from http://elastic.co) to apt. Run the following commands to import the Elasticsearch public GPG key into apt, create the Elasticsearch source list, and then update the package index.

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

$ echo "deb http://packages.elastic.co/elasticsearch/1.7/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-1.7.list

$ sudo apt-get update

Now install Elasticsearch using ‘apt-get’.

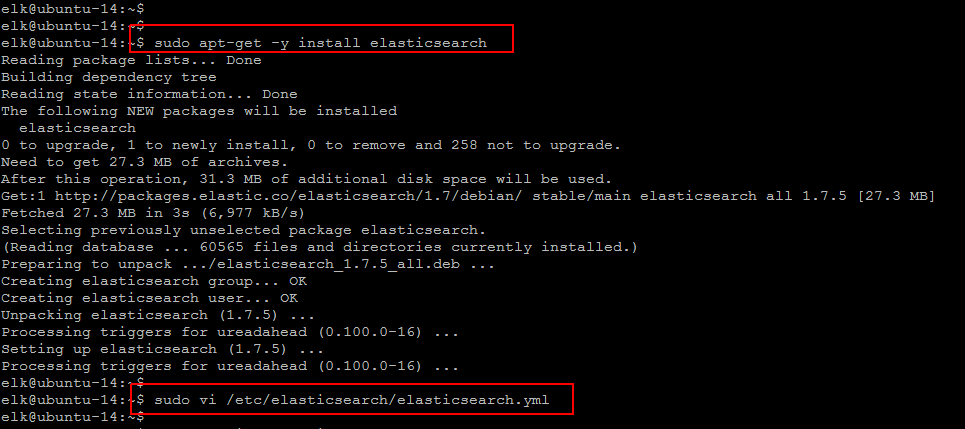

$ sudo apt-get -y install elasticsearch

Once the package is installed, configure Elasticsearch to restrict outside access to its HTTP API on port 9200, so that outsiders can’t read your data or shut down your Elasticsearch cluster. Open the configuration file, find the ‘network.host’ line, uncomment it, and set its value to localhost.

$ sudo vi /etc/elasticsearch/elasticsearch.yml

network.host: localhost
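If you prefer to make this change non-interactively (for example, from a provisioning script), a small helper can uncomment and set the key for you. This is a sketch that assumes GNU sed (the default on Ubuntu) and a flat ‘key: value’ line. ‘set_yaml_key’ is a hypothetical name, and values containing ‘/’ or ‘&’ would need escaping before being passed to sed.

```bash
#!/usr/bin/env bash
# Sketch: set "key: value" in a flat YAML file such as elasticsearch.yml.
# Assumes GNU sed; values containing "/" or "&" are NOT escaped here.
set_yaml_key() {
  local file="$1" key="$2" value="$3" re
  # Escape dots so that "network.host" is matched literally by the regex.
  re="$(printf '%s' "$key" | sed 's/\./\\./g')"
  if grep -Eq "^[#[:space:]]*${re}:" "$file"; then
    # Rewrite only the first matching line, dropping any leading "#".
    sed -i -E "0,/^[#[:space:]]*${re}:.*/s//${key}: ${value}/" "$file"
  else
    # The key does not appear at all: append it.
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

Because the file is owned by root, run it from a root shell, for example ‘set_yaml_key /etc/elasticsearch/elasticsearch.yml network.host localhost’.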

Logstash can gather logs of all types, but we will limit the scope of this tutorial to syslog gathering.

Save the configuration, then restart Elasticsearch and configure it to start at boot using the following commands.

$ sudo service elasticsearch restart

$ sudo update-rc.d elasticsearch defaults 95 10
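Elasticsearch can take several seconds to come up after a restart. The bash sketch below (the function name is hypothetical) polls a URL with curl until it answers or a timeout in seconds expires. It treats any HTTP response as "up", so it only checks reachability, not cluster health.

```bash
#!/usr/bin/env bash
# Sketch: poll an HTTP endpoint until it responds or a timeout (seconds) expires.
# Any HTTP response counts as "up"; this checks reachability, not cluster health.
wait_for_http() {
  local url="$1" timeout="${2:-60}" waited=0
  # curl exits non-zero while the connection is refused or times out.
  until curl -s -o /dev/null --max-time 2 "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for $url" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))   # approximate: counts sleeps, not curl time
  done
  echo "$url is responding"
}
```

On the ELK server, ‘wait_for_http http://localhost:9200 60 && curl 'http://localhost:9200/?pretty'’ should print the node’s name and version once Elasticsearch is ready. Because network.host is set to localhost, the same request from another machine should fail.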

Installing Kibana

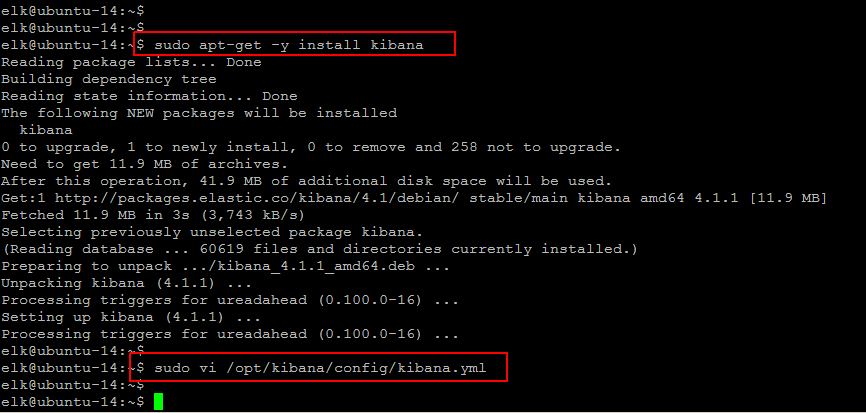

After successfully installing Elasticsearch, we are going to install Kibana. Run the following commands to add the Kibana repository to apt's source list and update the package index.

$ echo 'deb http://packages.elastic.co/kibana/4.1/debian stable main' | sudo tee /etc/apt/sources.list.d/kibana.list

$ sudo apt-get update

After the update, run the following commands to install Kibana on Ubuntu 14.04. Then make it accessible only from localhost by editing its default configuration file in your editor of choice and setting ‘host’ as shown. To allow external access, we will install an Nginx reverse proxy on the same server.

$ sudo apt-get -y install kibana

$ sudo vi /opt/kibana/config/kibana.yml

host: "localhost"

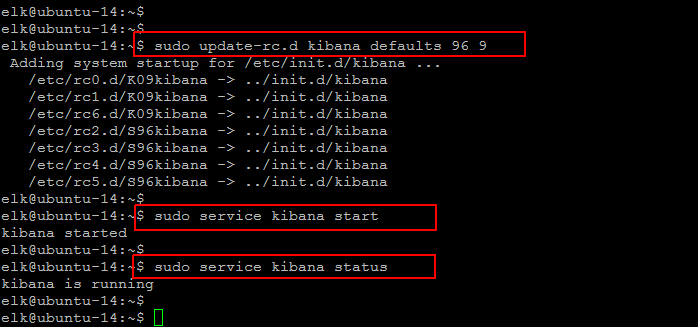

Save and close the configuration file, then run the following commands to enable Kibana at boot, start its service, and check its status.

$ sudo update-rc.d kibana defaults 96 9

$ sudo service kibana start

$ sudo service kibana status

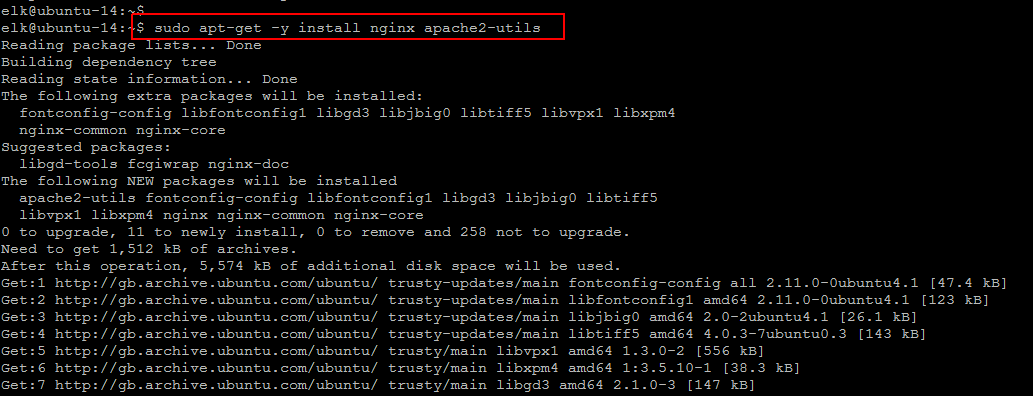
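To confirm that both Kibana (port 5601) and Elasticsearch (port 9200) are reachable only from the server itself, you can inspect the listening sockets. The hypothetical helpers below parse the output of ‘ss -ltn’ from iproute2, assuming the usual layout where the fourth column is the local address and port; treat this as a sketch.

```bash
#!/usr/bin/env bash
# Sketch: check that a TCP port is bound only to loopback addresses.
# Reads `ss -ltn` output on stdin; column 4 is assumed to be "Local Address:Port".

# Print the local address:port entries that end in the given port.
listen_addrs() {
  local port="$1"
  awk -v p=":$port" 'NR > 1 && substr($4, length($4) - length(p) + 1) == p { print $4 }'
}

# Succeed only if something listens on the port and every listener is loopback.
is_loopback_only() {
  local port="$1" addrs
  addrs="$(listen_addrs "$port")"
  if [ -z "$addrs" ]; then
    echo "nothing is listening on port $port" >&2
    return 1
  fi
  # Any entry that is not 127.x.x.x or ::1 means the port is exposed.
  if printf '%s\n' "$addrs" | grep -Evq '^(127\.|\[::1]:|::1:|\[::ffff:127\.)'; then
    echo "port $port is exposed on: ${addrs//$'\n'/ }" >&2
    return 1
  fi
  echo "port $port is bound to loopback only"
}
```

‘ss -ltn | is_loopback_only 5601’ and ‘ss -ltn | is_loopback_only 9200’ should both report loopback only. Once Nginx is installed, port 80 is the one service that is meant to be exposed.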

Installing NGINX

Because Kibana is configured to listen only on localhost, we need to set up an Nginx reverse proxy before we can reach its web interface from other machines. Run the following command to install Nginx and apache2-utils, which provides the ‘htpasswd’ tool.

$ sudo apt-get -y install nginx apache2-utils

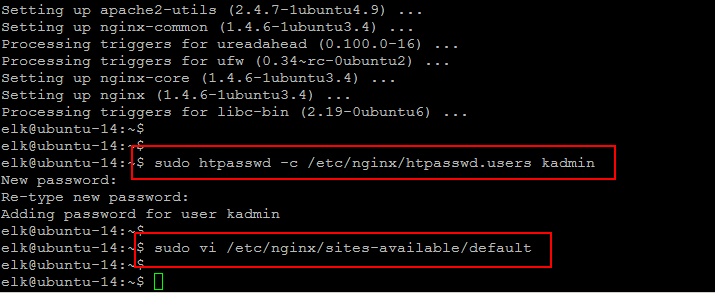

To protect the Kibana web interface, use the ‘htpasswd’ command to create an admin user, here ‘kadmin’ (you can choose any user name), and set its password at the prompt after running the command below.

$ sudo htpasswd -c /etc/nginx/htpasswd.users kadmin

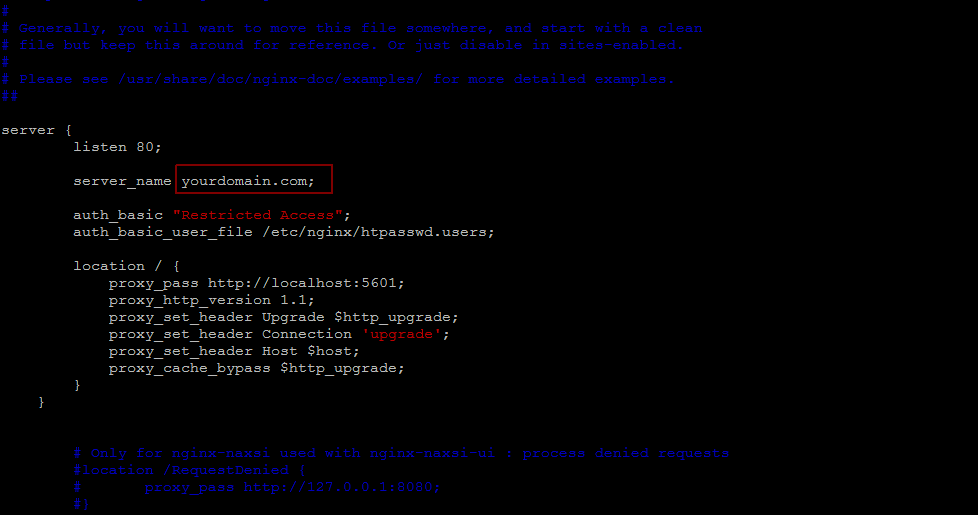

Then open the default Nginx server configuration file in your favorite editor and make the following changes, which configure Nginx to direct your server’s HTTP traffic to the Kibana application listening on localhost:5601. Nginx will also use the htpasswd.users file we created earlier to require basic authentication.

$ sudo vi /etc/nginx/sites-available/default

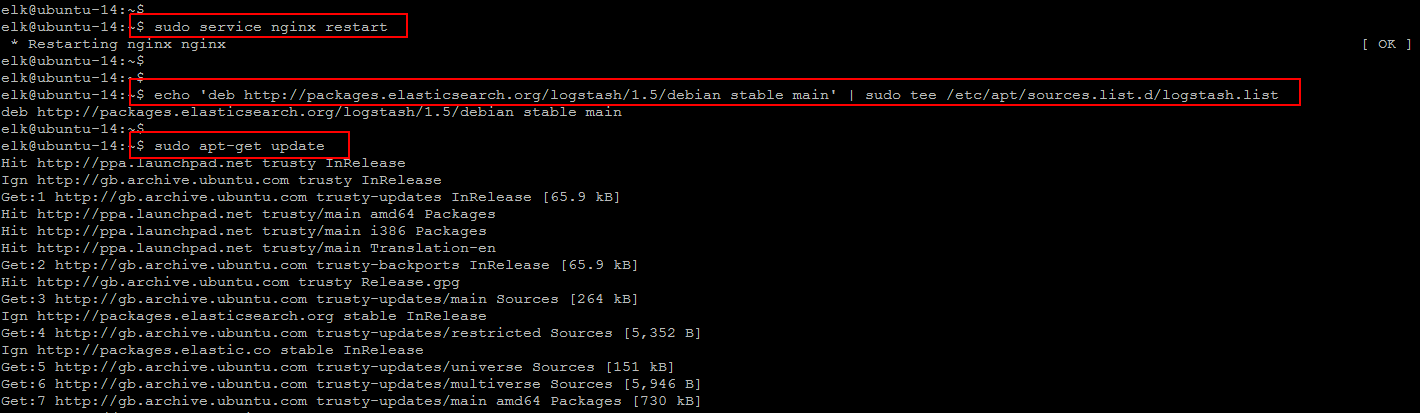
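The exact changes are shown in the screenshot above. As a text reference, a server block along the following lines is commonly used for this setup; it is a sketch, not a copy of the screenshot, and it assumes you replace the hypothetical server name elk.example.com with your own (or pass nothing to get ‘_’, Nginx's conventional catch-all name). The bash function only prints the configuration so you can review it before writing it to disk.

```bash
#!/usr/bin/env bash
# Sketch: print an Nginx server block that proxies to Kibana on localhost:5601
# and requires basic auth from /etc/nginx/htpasswd.users (created above).
render_kibana_site() {
  local server_name="${1:-_}"
  # Unquoted heredoc so ${server_name} expands; Nginx variables are escaped as \$.
  cat <<EOF
server {
    listen 80;
    server_name ${server_name};

    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/htpasswd.users;

    location / {
        proxy_pass http://localhost:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade \$http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host \$host;
        proxy_cache_bypass \$http_upgrade;
    }
}
EOF
}
```

Back up the original file first with ‘sudo cp /etc/nginx/sites-available/default /etc/nginx/sites-available/default.bak’, write the new one with ‘render_kibana_site elk.example.com | sudo tee /etc/nginx/sites-available/default > /dev/null’, and run ‘sudo nginx -t’ to check the syntax before restarting.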

After saving your changes, restart Nginx to apply them; the command should report an OK status, meaning Nginx is working correctly.

$ sudo service nginx restart

Now, if you open your server’s address in a web browser and enter the “kadmin” credentials, you should see a Kibana welcome page asking you to configure an index pattern. We’ll come back to that after installing all of the other components.

Installing Logstash

Logstash centralizes the processing of logs and other events from various sources. Add the Logstash repository to apt's source list, update the package index, and install the package.

$ echo 'deb http://packages.elasticsearch.org/logstash/1.5/debian stable main' | sudo tee /etc/apt/sources.list.d/logstash.list

$ sudo apt-get update

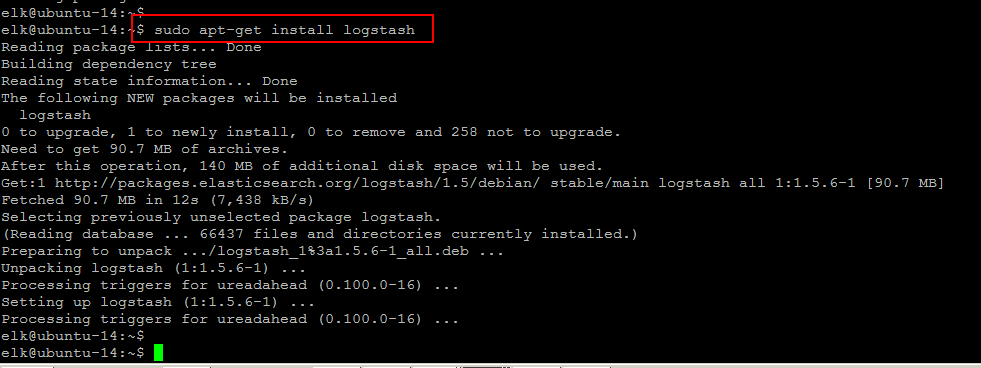

$ sudo apt-get install logstash

Generating SSL Certificates

We are going to use Logstash Forwarder to ship logs from our client servers to our Logstash Server, so we need to create an SSL certificate and key pair. Logstash Forwarder uses the certificate to verify the identity of the Logstash Server.

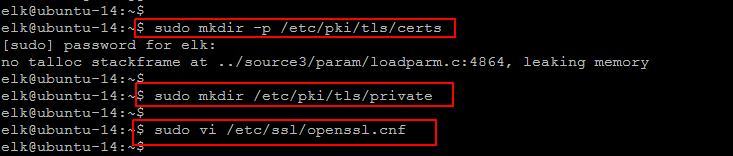

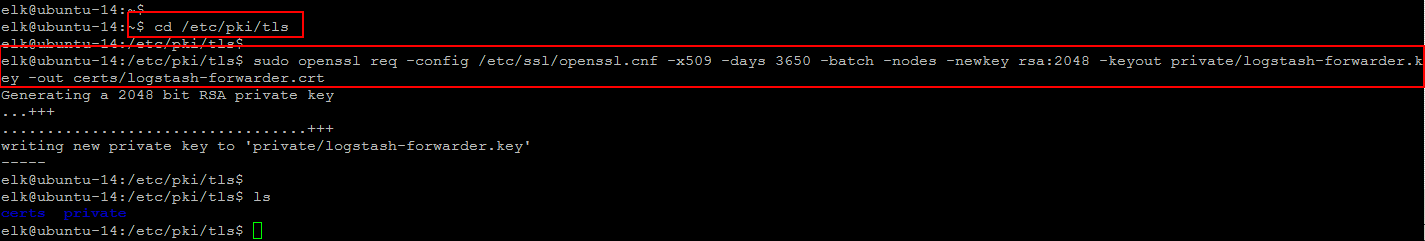

Run the below commands to create the directories that will store the certificate and private key.

$ sudo mkdir -p /etc/pki/tls/certs

$ sudo mkdir /etc/pki/tls/private

Now generate your SSL certificate. If your DNS setup allows your client servers to resolve the Logstash Server's IP address, you can use its FQDN; otherwise, use its IP address. When you use the IP address, you have to add your Logstash Server’s private IP address to the subjectAltName (SAN) field of the SSL certificate that we are about to generate.

To do so, open the OpenSSL configuration file, find the [ v3_ca ] section, and add the following line under it, replacing logstash_server_ip with your Logstash Server’s private IP address.

$ sudo vi /etc/ssl/openssl.cnf

[ v3_ca ]

subjectAltName = IP: logstash_server_ip
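The same edit can be scripted. This sketch (hypothetical function name, GNU sed assumed) appends the subjectAltName line directly after the ‘[ v3_ca ]’ header and does nothing if an identical line is already present, so running it twice is harmless. The address 10.0.0.5 used below is a placeholder.

```bash
#!/usr/bin/env bash
# Sketch: add "subjectAltName = IP: <ip>" under the [ v3_ca ] section of openssl.cnf.
# Assumes GNU sed (one-line "a text" syntax) and the "[ v3_ca ]" header spelling.
add_san_ip() {
  local cnf="$1" ip="$2" line
  line="subjectAltName = IP: ${ip}"
  if ! grep -q '^\[ v3_ca ]' "$cnf"; then
    echo "no [ v3_ca ] section in $cnf" >&2
    return 1
  fi
  # Idempotent: skip if the exact line already exists.
  if grep -qxF "$line" "$cnf"; then
    return 0
  fi
  # Append the line immediately after the section header.
  sed -i "/^\[ v3_ca ]/a ${line}" "$cnf"
}
```

Run it from a root shell, since the file is owned by root: ‘add_san_ip /etc/ssl/openssl.cnf 10.0.0.5’.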

Then generate the SSL certificate and private key in the appropriate locations (/etc/pki/tls/), with the following command.

$ cd /etc/pki/tls

$ sudo openssl req -config /etc/ssl/openssl.cnf -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt
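Before distributing the certificate, it is worth confirming that the IP address actually made it into the SAN field; without it, TLS verification in Logstash Forwarder is expected to fail when connecting by IP. The helpers below (hypothetical names) extract ‘IP Address:’ entries from the text that ‘openssl x509 -noout -text’ prints.

```bash
#!/usr/bin/env bash
# Sketch: list the IP addresses in a certificate's Subject Alternative Name.
# san_ips reads `openssl x509 -noout -text` output on stdin, one IP per line.
san_ips() {
  grep -o 'IP Address:[0-9A-Fa-f.:]*' | cut -d: -f2-
}

# Succeed if the certificate file lists the given IP in its SAN field.
cert_has_ip() {
  local crt="$1" ip="$2"
  openssl x509 -in "$crt" -noout -text | san_ips | grep -qxF "$ip"
}
```

For example, ‘cert_has_ip /etc/pki/tls/certs/logstash-forwarder.crt 10.0.0.5 && echo SAN OK’, where 10.0.0.5 is a placeholder for your server’s private IP.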

The generated logstash-forwarder.crt file must be copied to every server that will send logs to Logstash; we will do that after configuring Logstash.

Configuring Logstash

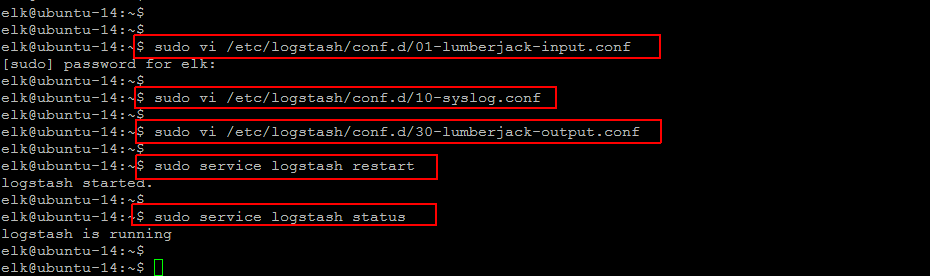

Logstash configuration files reside in the ‘/etc/logstash/conf.d’ directory. They use Logstash’s own JSON-like syntax and consist of three sections: inputs, filters, and outputs.

Let’s create a configuration file called ‘01-lumberjack-input.conf’ that sets up our “lumberjack” input, the protocol Logstash Forwarder uses, listening on TCP port 5043. Add the following contents to it.

$ sudo vi /etc/logstash/conf.d/01-lumberjack-input.conf

input {

lumberjack {

port => 5043

type => "logs"

ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"

ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"

}

}

:wq!

Next, create a syslog filter configuration file called ‘10-syslog.conf’ in the same directory (/etc/logstash/conf.d/10-syslog.conf) and add the following contents. The filter looks for logs labeled as type “syslog” (by Logstash Forwarder) and uses “grok” to parse incoming syslog lines into structured, queryable fields.

filter {

if [type] == "syslog" {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

syslog_pri { }

date {

match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]

}

}

}

:wq!
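To see what this filter extracts, here is a rough bash approximation of the grok pattern using a POSIX extended regular expression. It is only an illustration: the real SYSLOGTIMESTAMP, SYSLOGHOST, and DATA grok patterns are more permissive than the simplified expressions below (for example, the program name here is limited to letters, digits, and ‘_ . / -’), and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: approximate the syslog grok pattern with a bash regex (illustration only).
# Prints one field=value per line; returns 1 if the line does not match.
parse_syslog_line() {
  local line="$1"
  # 1 timestamp, 2 host, 3 program, 4 optional "[pid]", 5 pid, 6 message
  local re='^([A-Z][a-z]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([[:alnum:]_./-]+)(\[([0-9]+)\])?: (.*)$'
  if [[ $line =~ $re ]]; then
    printf 'syslog_timestamp=%s\n' "${BASH_REMATCH[1]}"
    printf 'syslog_hostname=%s\n' "${BASH_REMATCH[2]}"
    printf 'syslog_program=%s\n' "${BASH_REMATCH[3]}"
    printf 'syslog_pid=%s\n' "${BASH_REMATCH[5]}"
    printf 'syslog_message=%s\n' "${BASH_REMATCH[6]}"
  else
    return 1
  fi
}
```

Running ‘parse_syslog_line 'Oct 11 22:14:15 web01 sshd[4721]: Accepted publickey for elk'’ prints one field per line (syslog_timestamp, syslog_hostname, syslog_program, syslog_pid, syslog_message). For a line that does not match, real Logstash keeps the event but tags it with ‘_grokparsefailure’.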

Now create one more configuration file called ‘30-lumberjack-output.conf’ with the following output configuration, which tells Logstash to store the logs in Elasticsearch and also print each event to standard output for debugging. With this configuration, Logstash also accepts logs that do not match the filter, but their data will not be structured: details such as source IP addresses or served files stay inside a flat message instead of becoming separate fields.

$ sudo vi /etc/logstash/conf.d/30-lumberjack-output.conf

output {

elasticsearch { host => localhost }

stdout { codec => rubydebug }

}

:wq!
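A missing closing brace in any of these files prevents Logstash from starting. The bash function below is a deliberately crude pre-check (hypothetical name) that only compares the number of ‘{’ and ‘}’ characters in each file. Braces inside quoted strings are counted too, so treat it as a quick sanity check rather than a parser; Logstash’s own ‘--configtest’ flag, if your version supports it, performs a real syntax check.

```bash
#!/usr/bin/env bash
# Sketch: crude check that each Logstash config file has balanced braces.
# Counts every "{" and "}" (including any inside strings); not a real parser.
check_braces() {
  local f open close status=0
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      echo "$f: cannot read" >&2
      status=1
      continue
    fi
    open=$(tr -cd '{' < "$f" | wc -c)
    close=$(tr -cd '}' < "$f" | wc -c)
    if [ "$open" -ne "$close" ]; then
      echo "$f: $open '{' but $close '}'" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, run ‘check_braces /etc/logstash/conf.d/*.conf’, followed by ‘sudo /opt/logstash/bin/logstash --configtest -f /etc/logstash/conf.d/’ (the /opt/logstash path assumes the Debian package layout).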

The Logstash configuration is now complete. To apply it, restart the service using the command below.

$ sudo service logstash restart
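Logstash can take some time to start. Once it is up, the lumberjack input should be listening on port 5043. This sketch (hypothetical function name) uses bash’s built-in /dev/tcp redirection together with the coreutils ‘timeout’ command to test whether a TCP port accepts connections, so it also works on client servers that lack tools such as netcat.

```bash
#!/usr/bin/env bash
# Sketch: succeed if host:port accepts a TCP connection within N seconds.
# Uses bash's /dev/tcp pseudo-device (bash-specific) and coreutils `timeout`.
port_open() {
  local host="$1" port="$2" secs="${3:-5}"
  # The child bash opens the socket on fd 3; its exit status is the result.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

On the ELK server, ‘port_open 127.0.0.1 5043 && echo listening’ should succeed. From a client server, replace 127.0.0.1 with the Logstash Server’s private IP to confirm that no firewall blocks the port.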

Logstash Forwarder Setup on Client Server

To send logs to your Logstash Server, perform the following steps on each Ubuntu or Debian server whose logs you want to ship to the centralized ELK server.

Copying SSL Certificate to Client

First, copy the Logstash SSL certificate from the ELK server to the client server by running the following ‘scp’ command on the ELK server.

elk@ubuntu-14:~$ scp /etc/pki/tls/certs/logstash-forwarder.crt user@your_client_server:/tmp

Make sure the certificate was copied successfully; the client servers need it to communicate with the Logstash server.

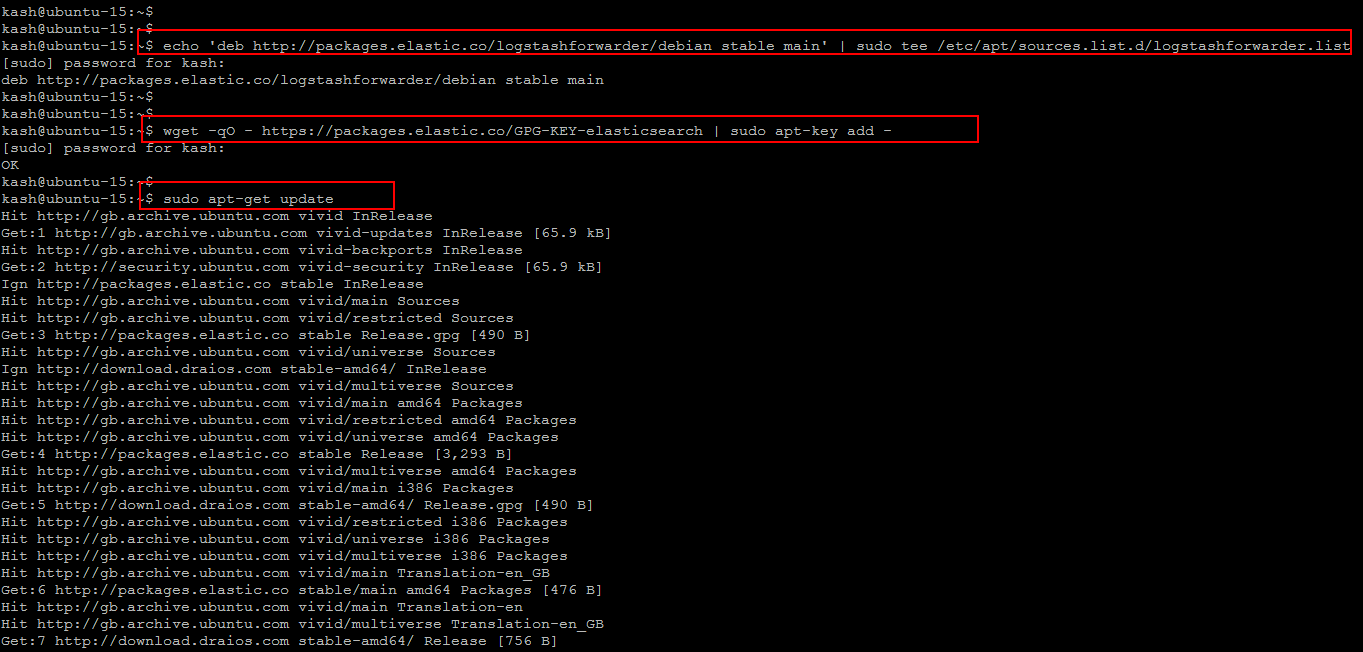
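One way to make sure the copy arrived intact is to compare checksums on both ends. The sketch below (hypothetical function names) computes a SHA-256 checksum locally with ‘sha256sum’ and asks the client for the same over ssh; it assumes you can ssh to the client as the same user you used for scp.

```bash
#!/usr/bin/env bash
# Sketch: verify that a file copied with scp matches the original, by SHA-256.

# Print only the hex digest of a local file.
sha256_of() {
  sha256sum < "$1" | cut -d' ' -f1
}

# Compare a local file with a remote copy (remote path must not contain spaces).
verify_remote_copy() {
  local local_file="$1" remote="$2" remote_file="$3" want got
  want="$(sha256_of "$local_file")"
  got="$(ssh "$remote" sha256sum "$remote_file" | cut -d' ' -f1)"
  if [ -n "$got" ] && [ "$want" = "$got" ]; then
    echo "checksums match: $want"
  else
    echo "checksum mismatch or unreadable remote file" >&2
    return 1
  fi
}
```

On the ELK server: ‘verify_remote_copy /etc/pki/tls/certs/logstash-forwarder.crt user@your_client_server /tmp/logstash-forwarder.crt’.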

Installing Logstash Forwarder on Client

Run the following command to create the Logstash Forwarder source list on your Ubuntu client server.

$ echo 'deb http://packages.elastic.co/logstashforwarder/debian stable main' | sudo tee /etc/apt/sources.list.d/logstashforwarder.list

Then import the Elasticsearch public GPG key into apt; the Logstash Forwarder repository is signed with the same key as Elasticsearch.

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Then update the package index.

$ sudo apt-get update

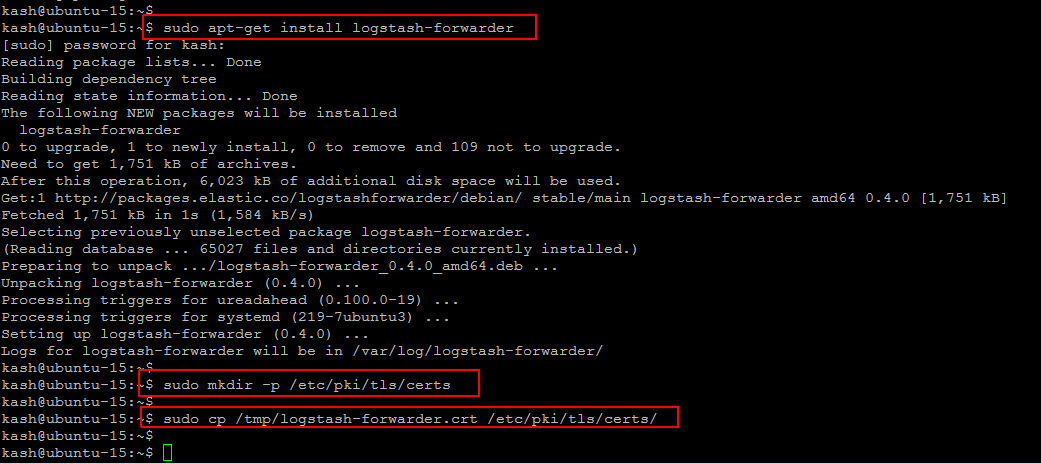

Now install the Logstash Forwarder package by running the following command.

$ sudo apt-get install logstash-forwarder

Once the installation is complete, copy the Logstash server’s SSL certificate from the ‘/tmp’ directory into the ‘/etc/pki/tls/certs’ directory.

$ sudo mkdir -p /etc/pki/tls/certs

$ sudo cp /tmp/logstash-forwarder.crt /etc/pki/tls/certs/

Configuring Logstash Forwarder

On the Ubuntu client server, open the Logstash Forwarder configuration file, which uses JSON format, in your editor and put the following contents under its ‘network’ section.

$ sudo vi /etc/logstash-forwarder.conf

"servers": [ "logstash_server_ip:5043" ],

"ssl ca": "/etc/pki/tls/certs/logstash-forwarder.crt",

"timeout": 15

This configures Logstash Forwarder to connect to your Logstash Server on port 5043 (the port we specified an input for earlier) and to use the SSL certificate we created earlier. Next, go to the ‘files’ section and add the following lines.

{

"paths": [

"/var/log/syslog",

"/var/log/auth.log"

],

"fields": { "type": "syslog" }

}

The ‘paths’ entry specifies which log files to send, and the ‘type’ field marks these logs as type “syslog”. Now save and quit the file using ‘:wq!’ and restart Logstash Forwarder to apply the changes.

$ sudo service logstash-forwarder restart

Logstash Forwarder should now be sending ‘syslog’ and ‘auth.log’ to the Logstash Server. Repeat this section for every other server whose logs you want to gather.

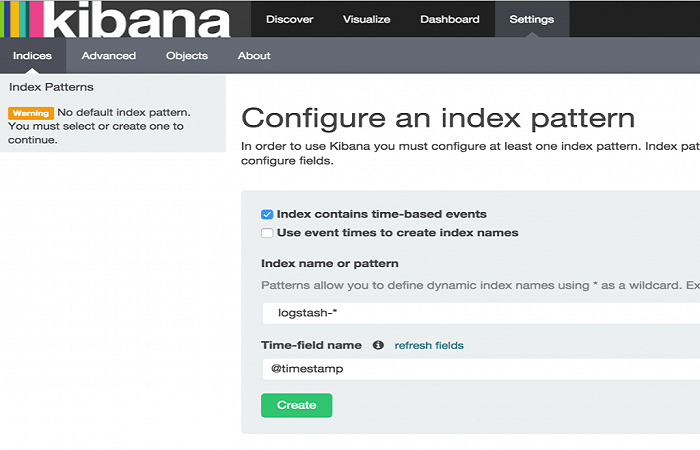
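When you repeat this on many clients, it can be easier to generate the whole file than to edit it by hand. The bash sketch below (hypothetical function name) prints a complete /etc/logstash-forwarder.conf that combines the ‘network’ and ‘files’ settings described above; the server address you pass in (10.0.0.5:5043 below) is a placeholder. Note that writing its output replaces the entire file, including anything else the package's default configuration contained.

```bash
#!/usr/bin/env bash
# Sketch: print a complete logstash-forwarder.conf for one Logstash server.
# $1 is "host:port" of the Logstash lumberjack input (placeholder value in usage).
render_forwarder_conf() {
  if [ -z "$1" ]; then
    echo "usage: render_forwarder_conf host:port" >&2
    return 1
  fi
  local server="$1"
  cat <<EOF
{
  "network": {
    "servers": [ "${server}" ],
    "ssl ca": "/etc/pki/tls/certs/logstash-forwarder.crt",
    "timeout": 15
  },
  "files": [
    {
      "paths": [
        "/var/log/syslog",
        "/var/log/auth.log"
      ],
      "fields": { "type": "syslog" }
    }
  ]
}
EOF
}
```

For example, ‘render_forwarder_conf 10.0.0.5:5043 | sudo tee /etc/logstash-forwarder.conf > /dev/null’, then optionally ‘python3 -m json.tool /etc/logstash-forwarder.conf’ to confirm the file is valid JSON before running ‘sudo service logstash-forwarder restart’.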

Connecting to Kibana

After setting up Logstash Forwarder on the client servers, open the Kibana web interface that we installed earlier by browsing to the FQDN or IP address of your Logstash Server. Because Kibana itself listens only on localhost:5601, you reach it through the Nginx proxy on port 80. After entering the “kadmin” credentials, you will see a page prompting you to configure an index pattern.

http://your_servers_ip/

Index patterns identify the Elasticsearch indices to run searches and analytics against, and they are also used to configure fields. Kibana makes an educated guess at your index and time-field names, so selecting “Create” here will get you started. If you then click the ‘Discover’ link in the top navigation bar, you will see all of the log data from the last 15 minutes. You can add more index patterns in the same way and then customize your dashboards to visualize the data on your centralized log server.
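If Kibana reports that it cannot find any indices matching the default pattern, check whether Elasticsearch has received data. With the output configuration used here, Logstash writes to daily indices that follow its default ‘logstash-YYYY.MM.DD’ naming scheme (dates in UTC). The hypothetical helper below builds today’s index name, or one for a given date, with GNU date so you can query it directly on the ELK server.

```bash
#!/usr/bin/env bash
# Sketch: build the name of Logstash's default daily index (logstash-YYYY.MM.DD, UTC).
# The optional argument is any date string GNU `date -d` understands.
logstash_index_for() {
  if [ $# -gt 0 ]; then
    date -u -d "$1" +'logstash-%Y.%m.%d'
  else
    date -u +'logstash-%Y.%m.%d'
  fi
}
```

On the ELK server, ‘curl -s 'http://localhost:9200/_cat/indices?v'’ lists all indices, and ‘curl -s "http://localhost:9200/$(logstash_index_for)/_count?pretty"’ returns the number of documents indexed today.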

Conclusion

In this article you have learned how to install and configure the ELK stack on Ubuntu 14.04, plus Logstash Forwarder on an Ubuntu 15 client server. Many plugins are available that you can choose and install to extend ELK and its web interface. Your centralized log server is now running, but there is still work to do to gather and filter specific logs and build customized dashboards. We hope you have found this article helpful. Don’t forget to leave us your comments or contact us in case of any difficulty.