AI workloads demand scale, speed, and control. See how Atmosphere helps teams ditch vendor lock-in and run GPU-hungry models on their own terms.

AI is advancing at a breakneck pace, and infrastructure is struggling to keep up. Training a single large-scale AI model today can require thousands of GPUs running for weeks, costing millions in compute power alone.

In fact, OpenAI’s GPT-4 reportedly took 90 days to train on an array of 25,000 GPUs. The more AI models scale, the more compute resources they consume, putting immense pressure on cloud strategies to remain cost-efficient, scalable, and future-proof.

But that’s not the only problem. AI infrastructure is also becoming increasingly fragmented. Many enterprises rely on proprietary cloud AI services, which offer convenience but lock organizations into closed ecosystems with rising costs. The price of training AI models in the public cloud has skyrocketed, with GPU instance costs increasing on average by 40% in just one year.

Moving workloads between different clouds or from cloud to on-prem can be a logistical nightmare, requiring costly rearchitecting.

That’s why AI-driven businesses are turning to open-source cloud platforms. By using technologies like OpenStack and Kubernetes, organizations can build AI infrastructure that is scalable, flexible, and completely under their control.

Atmosphere, a production-grade OpenStack distribution, enables enterprises to deploy, scale, and optimize AI workloads on their terms.

Why Open Source Is Reshaping AI Cloud Strategies

AI models require substantial computational power, particularly for training deep neural networks and running inference workloads in production. But deploying AI applications efficiently is not just about raw compute capacity. It requires an infrastructure that seamlessly integrates compute acceleration, orchestration, networking, and storage while maintaining operational flexibility.

Public cloud providers offer managed AI services, but they come with trade-offs. GPU access often comes with markup-heavy pricing, and proprietary machine learning pipelines lock businesses into specific ecosystems. Migrating workloads away from these environments can be expensive and time-consuming. A cloud built on open-source technologies removes these restrictions, allowing organizations to deploy AI workloads on their own terms, whether in private data centers, hybrid clouds, or multi-cloud environments.

This flexibility is even more critical when considering that nearly 83% of container costs are associated with idle resources. Proprietary cloud platforms make this inefficiency even worse by forcing users into predefined instance sizes and configurations.

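With Atmosphere, organizations can precisely tailor their compute resources, for example by defining custom flavors whose vCPU, memory, and GPU allocations match what each workload actually needs.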
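To make the idle-resource point concrete, here is a minimal Python sketch comparing one workload's request against a fixed instance catalog and against a custom-sized flavor. The `Size` type, the `g.small`/`g.large`/`gpu.custom` names, and their dimensions are hypothetical, not real provider or Atmosphere offerings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Size:
    """An instance size (or OpenStack flavor) described by vCPUs, RAM, and GPUs."""
    name: str
    vcpus: int
    ram_gib: int
    gpus: int


def smallest_fit(catalog, vcpus, ram_gib, gpus):
    """Return the smallest size that satisfies every dimension, or None."""
    fits = [s for s in catalog
            if s.vcpus >= vcpus and s.ram_gib >= ram_gib and s.gpus >= gpus]
    # Prefer fewer GPUs first, since they dominate cost, then fewer vCPUs and less RAM.
    return min(fits, key=lambda s: (s.gpus, s.vcpus, s.ram_gib), default=None)


def stranded(size, vcpus, ram_gib, gpus):
    """Resources that are paid for but not needed by the workload."""
    return {"vcpus": size.vcpus - vcpus,
            "ram_gib": size.ram_gib - ram_gib,
            "gpus": size.gpus - gpus}


# Hypothetical workload: needs 12 vCPUs, 96 GiB RAM, and 1 GPU.
catalog = [Size("g.small", 8, 61, 1), Size("g.large", 32, 244, 4)]
picked = smallest_fit(catalog, 12, 96, 1)
custom = Size("gpu.custom", 12, 96, 1)  # hypothetical tailor-made flavor

print(picked.name, stranded(picked, 12, 96, 1))
print(custom.name, stranded(custom, 12, 96, 1))
```

Because `g.small` is one dimension short, the fixed catalog forces the jump to `g.large`, stranding 20 vCPUs, 148 GiB of RAM, and 3 GPUs; the custom flavor strands nothing.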

Compute Power That Keeps Up with AI Workloads

Training AI models at scale requires high-performance compute resources, particularly GPUs. CPUs have a relatively small number of powerful cores tuned for fast sequential work, whereas GPUs execute thousands of operations in parallel, which suits the large matrix computations at the heart of deep learning. However, how efficiently those GPUs are used depends on how the cloud infrastructure is designed.

Atmosphere enables AI applications to tap directly into GPU resources using PCI passthrough, ensuring that workloads have unrestricted access to hardware acceleration. Instead of relying on cloud provider-managed instances with limited flexibility, organizations can allocate dedicated or shared GPUs based on workload requirements. This approach prevents resource bottlenecks, reduces overhead, and ensures low-latency AI processing.
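As a rough illustration of what PCI passthrough involves in upstream OpenStack Nova, the sketch below renders a `[pci]` section for `nova.conf` and the matching flavor extra spec. `device_spec` and `alias` are Nova's `[pci]` options (`device_spec` replaced the older `passthrough_whitelist` in newer releases), and `pci_passthrough:alias` is the flavor property Nova reads; how Atmosphere itself templates these settings is not shown here. Vendor ID `10de` is NVIDIA's PCI vendor ID, while the product ID `20b5` and the alias name `a100` are placeholders to replace with values from `lspci -nn` on your hosts.

```python
import configparser
import io
import json


def nova_pci_config(vendor_id: str, product_id: str, alias_name: str) -> str:
    """Render a nova.conf [pci] section exposing matching GPUs for passthrough.

    device_spec belongs on compute hosts; alias must also be set where nova-api runs.
    """
    spec = {"vendor_id": vendor_id, "product_id": product_id}
    # type-PCI marks a plain PCI device (not an SR-IOV physical or virtual function).
    alias = {**spec, "device_type": "type-PCI", "name": alias_name}
    parser = configparser.ConfigParser()
    parser["pci"] = {"device_spec": json.dumps(spec), "alias": json.dumps(alias)}
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


def flavor_extra_specs(alias_name: str, count: int) -> dict:
    """Flavor property asking Nova for `count` devices matching the alias."""
    return {"pci_passthrough:alias": f"{alias_name}:{count}"}


cfg = nova_pci_config("10de", "20b5", "a100")  # product ID is a placeholder
print(cfg)
print(flavor_extra_specs("a100", 1))
```

The extra spec could then be applied with `openstack flavor set --property pci_passthrough:alias=a100:1 <flavor>`, so every instance booted from that flavor receives a dedicated GPU.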

But raw compute power isn’t enough; AI workloads also need effective orchestration. Kubernetes, deeply integrated into Atmosphere’s architecture, provides automated workload scaling and self-healing, restarting or rescheduling containers that fail. As computational demands shift, Kubernetes scales workloads up or down, helping keep GPUs well utilized while avoiding unnecessary over-provisioning.
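Kubernetes documents the Horizontal Pod Autoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). Below is a minimal Python sketch of that rule only; the real controller also applies stabilization windows and scaling policies. It assumes a GPU-utilization metric is exposed to the autoscaler through a custom-metrics pipeline, and the replica bounds stand in for an HPA's `minReplicas`/`maxReplicas`.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling ratio, ignoring small deviations, then clamp to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 inference replicas averaging 90% GPU utilization against a 60% target.
print(desired_replicas(4, 90, 60))  # 6: scale out
# The same replicas at 20% utilization.
print(desired_replicas(4, 20, 60))  # 2: scale in
```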

Since 54% of wasted container spend comes from oversized infrastructure, Kubernetes’ autoscaling helps keep provisioned compute close to actual demand. This reduces unnecessary costs while keeping AI workloads efficient.
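The two kinds of waste behind these statistics are commonly defined as cluster idle (capacity that is provisioned but not requested by any workload) and workload idle (capacity that is requested but not actually used). The Python sketch below splits a node pool's cost along those lines; the `Node` fields and all numbers are hypothetical and counted in GPUs for simplicity.

```python
from dataclasses import dataclass


@dataclass
class Node:
    capacity_gpus: int    # GPUs provisioned on the node
    requested_gpus: int   # GPUs reserved by pod resource requests
    used_gpus: float      # GPUs actually busy, averaged over the period
    hourly_cost: float    # what the node costs per hour


def idle_breakdown(nodes):
    """Return cluster-idle and workload-idle spend as fractions of total spend."""
    cluster_idle = workload_idle = total = 0.0
    for n in nodes:
        per_gpu = n.hourly_cost / n.capacity_gpus
        cluster_idle += (n.capacity_gpus - n.requested_gpus) * per_gpu  # never requested
        workload_idle += (n.requested_gpus - n.used_gpus) * per_gpu     # requested, unused
        total += n.hourly_cost
    return {"cluster_idle": cluster_idle / total,
            "workload_idle": workload_idle / total}


pool = [Node(8, 8, 8, 16.0), Node(8, 4, 2, 16.0)]
print(idle_breakdown(pool))  # {'cluster_idle': 0.25, 'workload_idle': 0.125}
```

Autoscaling nodes attacks the cluster-idle share by removing capacity nobody requested; shrinking oversized resource requests attacks the workload-idle share.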

Unlike traditional HPC environments that require extensive manual configuration, Atmosphere’s Cluster API driver for Magnum simplifies Kubernetes cluster deployment. AI teams can spin up GPU-backed Kubernetes environments in minutes, reducing time-to-insight and accelerating development cycles.
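For a sense of what requesting such a cluster looks like from the command line, the sketch below assembles an `openstack coe cluster create` call using python-magnumclient options (`--cluster-template`, `--master-count`, `--node-count`, `--flavor`). The template name `k8s-gpu-template`, the `gpu.a100` worker flavor, and the cluster name are hypothetical; the command is only printed, not executed.

```python
import shlex


def cluster_create_cmd(name: str, template: str, gpu_flavor: str,
                       workers: int, control_planes: int = 3) -> list:
    """Build (but do not run) a Magnum cluster-create command line."""
    if workers < 1:
        raise ValueError("a cluster needs at least one worker node")
    return [
        "openstack", "coe", "cluster", "create",
        "--cluster-template", template,         # hypothetical template name
        "--master-count", str(control_planes),  # control-plane nodes
        "--node-count", str(workers),           # GPU worker nodes
        "--flavor", gpu_flavor,                 # hypothetical GPU flavor for workers
        name,
    ]


cmd = cluster_create_cmd("llm-train", "k8s-gpu-template", "gpu.a100", workers=4)
print(shlex.join(cmd))
```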

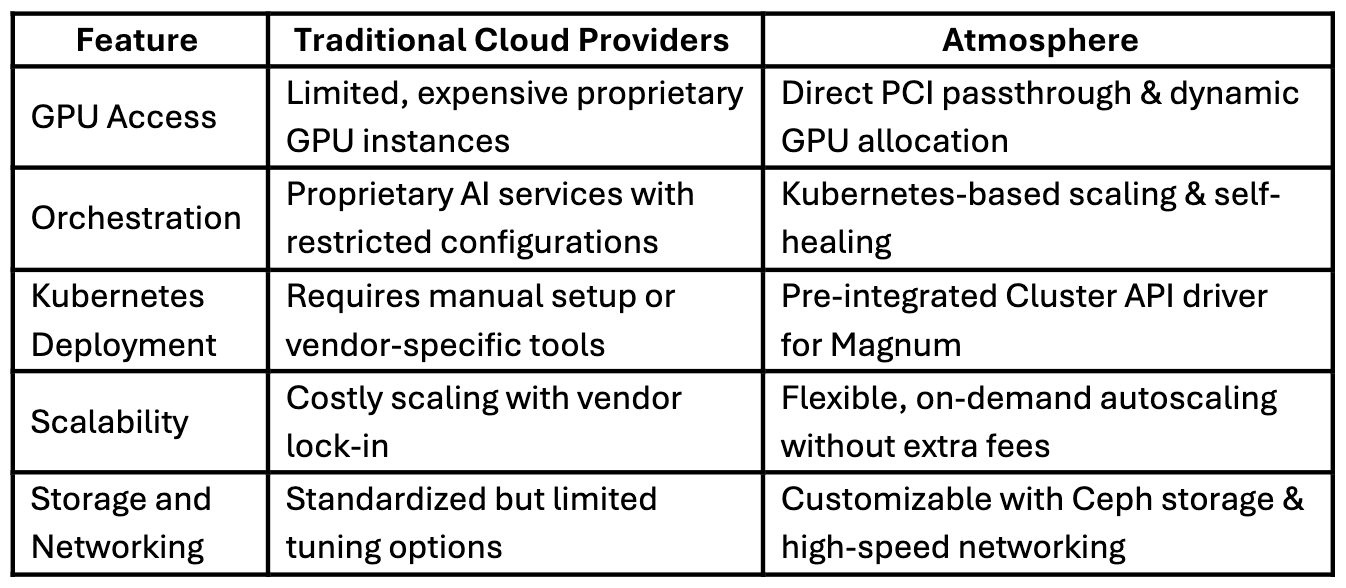

To illustrate the benefits, here’s how Atmosphere enhances AI infrastructure compared to traditional cloud environments:

By integrating these capabilities, Atmosphere offers full control over AI workloads, optimizing performance while maintaining cost efficiency.

Scaling AI Data with High-Performance Storage

AI models rely on fast, reliable access to large volumes of data, whether it's for training, checkpointing, or real-time inference. To support this, high-throughput, low-latency storage is essential.

Atmosphere uses Ceph-powered block storage to provide quick, dependable access to both structured and unstructured data. Although compute and storage nodes remain separate, the platform is built to reduce latency and maintain high data transfer rates between them.

With better data locality, teams can access training sets, checkpoints, and inference outputs more efficiently, cutting down on unnecessary transfers and delays, especially for GPU-driven workloads.

Ceph’s ability to scale horizontally means the storage layer can grow alongside your AI models. As workloads become more complex, there’s no need to rework the infrastructure.
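Scaling Ceph horizontally means adding OSDs (typically one per disk) and letting the cluster rebalance data across them. A back-of-the-envelope Python sketch of how plannable capacity grows for a replicated pool, assuming 3-way replication (Ceph's default pool `size`) and planning to stay under the default `mon_osd_nearfull_ratio` of 0.85; the OSD counts and disk sizes are hypothetical, and erasure-coded pools would have a different overhead.

```python
def usable_tib(osd_count: int, osd_size_tib: float,
               replica_size: int = 3, nearfull_ratio: float = 0.85) -> float:
    """Estimate plannable capacity of a replicated Ceph pool.

    Every object is stored replica_size times, and operators usually keep
    utilization below the nearfull threshold to leave room for recovery.
    """
    raw = osd_count * osd_size_tib
    return raw / replica_size * nearfull_ratio


# Hypothetical growth: three storage nodes with 4 x 10 TiB OSDs each, then six nodes.
for osds in (12, 24):
    print(osds, "OSDs ->", round(usable_tib(osds, 10.0), 1), "TiB usable")
```

Capacity grows linearly with the number of OSDs, which is why adding storage nodes does not require reworking the rest of the infrastructure.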

Since 29% of container costs come from workload idle (resources that workloads request but never use), efficient storage management helps avoid the same kind of waste in the storage layer. Teams can support expanding AI use cases without overprovisioning or relying on external storage providers, while keeping complete control over how AI data is stored, replicated, and retrieved.

Optimized Networking for AI Workloads

Training large AI models requires moving massive volumes of data between compute, storage, and accelerator nodes with both speed and reliability. In traditional environments, network congestion and latency can slow down distributed training and inference.

Atmosphere uses advanced networking technologies like SR-IOV, DPDK, and ASAP² to minimize virtualization overhead and support high-speed, low-latency data transfer. These capabilities are especially useful for GPU-heavy workloads, enabling more efficient data flow between GPUs, storage, and compute resources.
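In OpenStack, SR-IOV and ASAP²-style OVS hardware offload are requested per Neutron port: the VNIC type is set to `direct`, and for hardware offload the port's binding profile advertises the `switchdev` capability. The helper below only builds those attributes using openstacksdk's `Port` field names (`binding_vnic_type`, `binding_profile`); the network ID is a placeholder, and whether Atmosphere exposes these exact knobs to tenants is an assumption.

```python
def gpu_port_attrs(network_id: str, hw_offload: bool = False) -> dict:
    """Attributes for a Neutron port whose traffic skips the software virtual switch.

    vnic_type "direct" asks Neutron for an SR-IOV virtual function. Adding the
    "switchdev" capability asks for that VF to be managed by OVS/OVN with its
    flows offloaded to the NIC, the mode ASAP2-capable adapters provide.
    """
    attrs = {"network_id": network_id, "binding_vnic_type": "direct"}
    if hw_offload:
        attrs["binding_profile"] = {"capabilities": ["switchdev"]}
    return attrs


print(gpu_port_attrs("NETWORK_ID", hw_offload=True))
```

With an authenticated openstacksdk connection these could be passed as `conn.network.create_port(**attrs)`; the CLI equivalent is `openstack port create --network <net> --vnic-type direct --binding-profile '{"capabilities": ["switchdev"]}' <name>`.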

Networking in Atmosphere is built on OVN, a fully distributed virtual networking layer. This architecture removes centralized bottlenecks and allows compute, storage, and containerized workloads to communicate and stay in sync across complex, distributed setups.

The control plane, managed through Kubernetes and OpenStack, includes high availability, auto-healing, and rolling upgrades by default. These features keep orchestration and monitoring systems active, so AI workloads can recover from disruptions and continue without manual effort.
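High availability for quorum-based control-plane components, such as etcd, which stores Kubernetes cluster state, follows simple majority arithmetic: a cluster of n voting members needs floor(n/2) + 1 of them to agree, so it survives the loss of the rest. A small Python sketch; the member counts are illustrative, not Atmosphere's deployment sizes.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of voting members."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

This is why three-node control planes are common: a fourth member raises the quorum without adding any fault tolerance.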

The AI Cloud That Works for You

AI infrastructure isn’t static. Models evolve, frameworks improve, and new hardware optimizations emerge every year. Sticking to a proprietary cloud means waiting for vendors to catch up. With an open-source cloud, businesses can adapt on their own terms.

Atmosphere keeps AI infrastructure up to date by integrating the latest OpenStack and Kubernetes advancements. Kubernetes clusters can be upgraded in place, so AI workloads can keep running through the upgrade instead of being migrated to a freshly built cluster.
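Upgrading nodes in place without taking workloads offline generally means replacing them in small batches while enough capacity stays in service. The sketch below plans such batches; the function, its `max_batch_size` and `min_serving` parameters (the latter playing the role a Kubernetes PodDisruptionBudget would), and the node names are all hypothetical.

```python
def plan_rolling_upgrade(nodes, max_batch_size=1, min_serving=1):
    """Split nodes into upgrade batches that keep at least min_serving nodes in service."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    if len(nodes) - max_batch_size < min_serving:
        raise ValueError("batch too large: too few nodes would remain in service")
    # Each batch is drained and upgraded before the next one starts.
    return [nodes[i:i + max_batch_size] for i in range(0, len(nodes), max_batch_size)]


workers = ["gpu-worker-1", "gpu-worker-2", "gpu-worker-3", "gpu-worker-4", "gpu-worker-5"]
for batch in plan_rolling_upgrade(workers, max_batch_size=2, min_serving=3):
    print("drain and upgrade:", batch)
```

A single-replica job on a drained node still restarts, so long-running training jobs usually rely on checkpointing to ride through such upgrades.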

Instead of waiting for cloud providers to roll out support for new GPUs, AI teams using open-source infrastructure can adopt the latest optimizations as soon as they are available, whether it’s a new scheduling algorithm, AI pipeline framework, or cloud-native ML tool.

Ready to take control of your AI cloud strategy?

Whether you’re scaling deep learning workloads, optimizing inference pipelines, or looking for a vendor-free cloud solution, Atmosphere provides the tools to build an AI infrastructure that works for you. Get in touch today to explore how an open-source AI cloud can drive efficiency, flexibility, and long-term success.